At the recent Hot Pod Summit in Brooklyn, Adobe unveiled an experimental tool that could change how music is made. The announcement featured Project Music GenAI Control, a platform that generates audio from a user’s text prompt, such as “cheerful dance music” or “sad jazz”, and then lets the user customize the result in depth.

Still at the research stage, the project, developed with researchers from the University of California (UC) and Carnegie Mellon University (CMU), does not yet have a user interface. Even so, Gautham Mysore, head of Adobe’s audio and video AI research, emphasized that it will let even non-composers bring their ideas to life, much like a conductor leading a virtual orchestra.

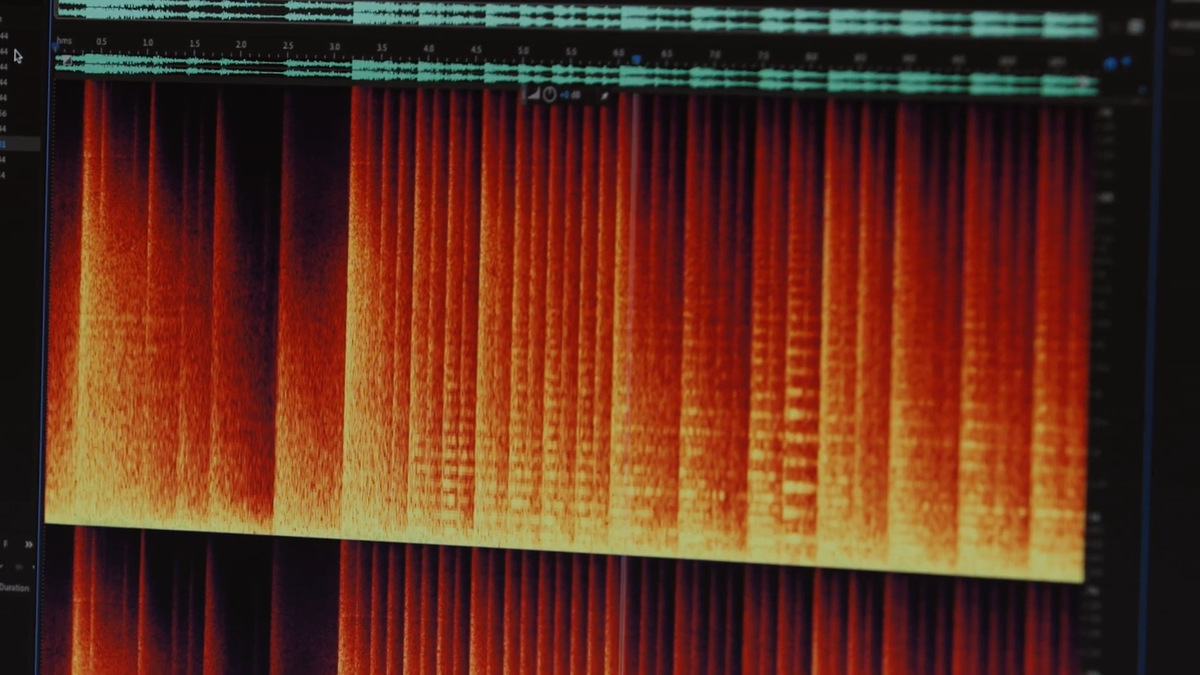

Project Music GenAI Control gives users tools to adjust the tempo, intensity, repeating patterns, and structure of a track. This opens the door to experimenting with an unlimited number of music fragments and remixes, expanding what creators can do.

However, bringing AI into music production raises both technological and ethical challenges. Growing interest in AI-generated tracks raises questions about copyright infringement and the authenticity of musical works. A recent U.S. federal court ruling that AI-generated art created without human authorship cannot be protected by copyright further underscores the need for clear legal frameworks governing such technologies.

Adobe aims to address these issues by building its tools on licensed or public-domain data to avoid intellectual property infringement. The company is also developing watermarking technology to identify music created with Project Music GenAI Control.