Ambient Diffusion: An AI Learning System Protecting Copyrights

Artificial Intelligence (AI) models, which generate images based on textual descriptions, pose the risk of breaching copyrights as they can “remember” original images. To safeguard against lawsuits by copyright holders, an innovative system called Ambient Diffusion was launched, which trains AI models solely on distorted data.

The Functionality and Advantage of Diffusion Models

Diffusion models are advanced machine learning algorithms that generate high-quality items. They slowly add noise to a data set, then reverse the process. Studies reveal that these models can memorize samples from their training set – a feature with potential adverse repercussions for privacy, security, and copyrights. For instance, when trained with X-ray images, AI should not memorize specific patient images.

Ambient Diffusion: A Solution for Confidentiality Issues

To circumvent such problems, scholars from the University of Texas at Austin and University of California, Berkeley developed the Ambient Diffusion framework. This trains diffusion AI models exclusively on images distorted to unrecognizability, making it nearly impossible for the AI to “memorize” and reproduce the original work.

Testing Penned with Positive Results

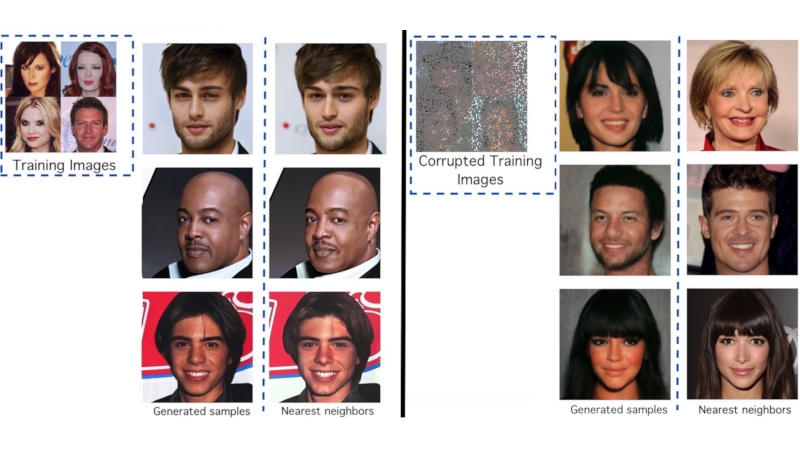

The researchers trained the AI model with 3000 celebrity images from the CelebA-HQ database to test their hypothesis. Upon receiving requests, this model started generating images nearly identical to their originals. The researchers retrained the model using 3000 images with extreme distortions, where up to 90% of pixels were masked. Following this, the model generated realistic human faces significantly different from the original images. The source code of the project is available on GitHub.