Nvidia Dominates Accelerator Market as Intel and AMD Strive to Compete

Nvidia currently dominates the market for compute accelerators used to train large language models, controlling over 90% of the segment, according to experts from IoT Analytics. Despite concerted efforts, rivals Intel and AMD have struggled to field successful competing products.

Nvidia’s Market Share Towers Above Rivals

AMD’s share of the server-grade GPU market is estimated at 3%, whereas Nvidia’s exceeds 90%, according to Nikkei Asian Review. By comparison, AMD took in $6.5 billion from server component sales last year, while Nvidia reported $47.5 billion in server segment revenue. In the last quarter alone, Nvidia’s server segment revenue reached $22.6 billion, up 427% from the same period a year earlier. Over the same period, AMD’s server revenue was $2.3 billion, an 80% increase. Intel’s server business remains larger than AMD’s in absolute terms, at $15.5 billion last year and $3 billion in the latest quarter, but it grew by just 5%.

The Struggle For Second Position

Andrew Dieckmann, head of AMD’s server business, acknowledged Nvidia’s market dominance and emphasized the importance of proving to customers that AMD is a stable “number two” in the market.

Client PC Segment and Growth Opportunities

Meanwhile, in the client PC segment, optimization for Microsoft Copilot is proceeding fastest on Qualcomm processors, giving the company an opportunity to grow its market share. Intel estimates that by 2028, up to 80% of PCs sold will support AI acceleration. The company aims to deliver over 40 million compatible processors this year and more than 100 million next year. AMD is in no hurry to publish growth forecasts of its own, but it is not lacking in ambition. Counterpoint Research warned that the PC market, already saturated with numerous players and offerings, won’t grow immediately to $1 trillion annually and will initially remain highly fragmented, with low growth rates.

Shaking Nvidia’s Dominance

Nvidia’s competitors have a chance to challenge the company in compute accelerators used for inference rather than for training language models. Inference workloads require less computational power and energy, and this is an area where Intel and AMD could gain a foothold, according to Bank of America analysts. Intel representatives believe that AI systems will reach broad market adoption once corporate customers show interest in solutions that run inference on their data. Having declared its interest in this segment, Intel plans to start shipping Gaudi 3 accelerators this year, aiming to offer the best total cost of ownership.

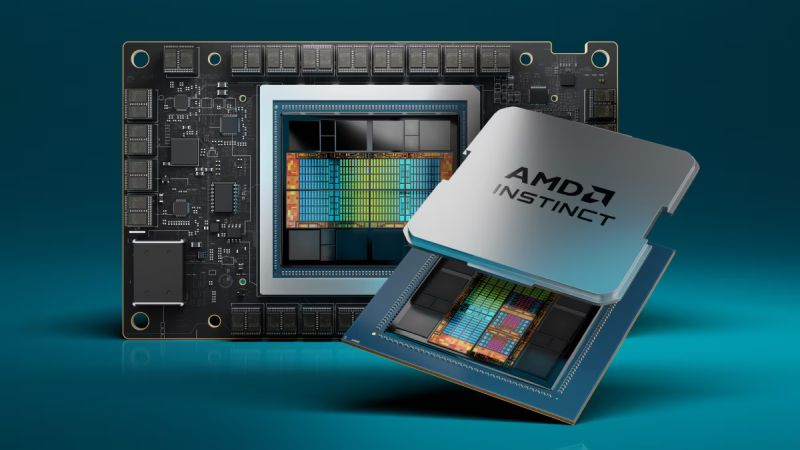

AMD’s Strategy in AI Market

AMD also sees more earning opportunities in the inference segment. The company’s Instinct MI300X boasts a high-performance memory subsystem without using the HBM3E chips found in Nvidia’s H200 accelerators. AMD’s chip design allows an accelerator to carry more memory than Nvidia’s can accommodate, and this advantage will grow with the adoption of HBM3E.

Nvidia’s Profitable Venture

Nvidia isn’t lagging either. Its accelerators are increasingly used in AI systems focused on inference. Over the past four quarters, such use has accounted for up to 40% of Nvidia’s server segment revenue. According to Nvidia CEO Jensen Huang, even if competitors gave their accelerators away for free, Nvidia’s offerings would still be more cost-effective thanks to lower operating expenses.