Nvidia recently announced enhancements to its GeForce RTX graphics cards and RTX AI PC platforms that significantly improve AI performance. The upgrades, delivered with the GeForce Game Ready 555.85 WHQL driver, were announced at Microsoft’s Build conference.

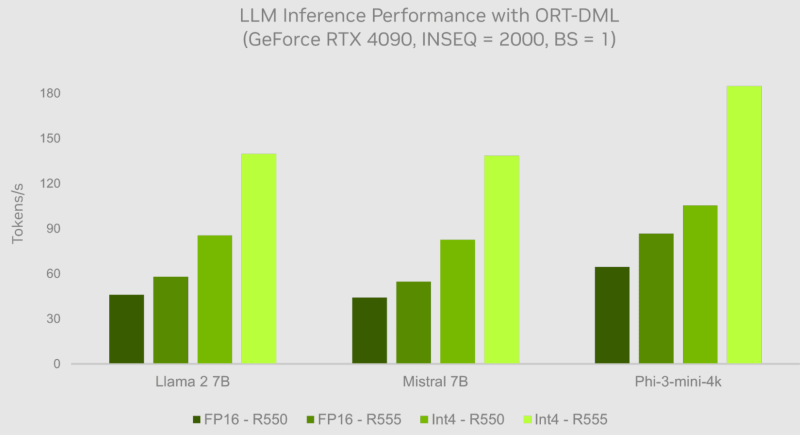

According to Nvidia, the latest enhancements aim to speed up several large language models (LLMs) used in generative AI. Nvidia says its R555 driver lets GeForce RTX graphics cards and RTX AI PC platforms run AI workloads up to three times faster with ONNX Runtime (ORT) and DirectML, both of which are used to run AI models on Windows.

The new driver also accelerates WebNN, an interface web developers use to deploy AI models, when it runs through DirectML. Nvidia says it is working with Microsoft to further improve RTX GPU performance and to add DirectML support to PyTorch. The R555 driver brings the following features to RTX AI PCs and GeForce RTX GPUs:

- Support for the DQ-GEMM metacommand, which handles INT4 weight-only quantization for LLMs.

- New RMSNorm normalization methods for Llama 2, Llama 3, Mistral and Phi-3 models.

- Grouped-query and multi-query attention mechanisms, plus sliding window attention to support Mistral.

- In-place KV cache updates to improve attention performance.

- Support for GEMM on tensors whose dimensions are not multiples of 8, improving context-phase performance.
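Weight-only INT4 quantization stores each weight as a 4-bit integer code plus a shared floating-point scale, while activations stay in higher precision; a dequantize-then-GEMM (DQ-GEMM) step turns the codes back into approximate weights for the matrix multiply. The pure-Python sketch below illustrates the idea under stated assumptions: symmetric quantization to the range [-8, 7] with one scale per group of four weights. The function names and group size are hypothetical and do not reflect the DirectML metacommand's actual interface; a production kernel would pack the 4-bit codes tightly and fuse dequantization into the GEMM on the GPU.

```python
from typing import List, Tuple

Matrix = List[List[float]]
QuantRow = Tuple[List[int], List[float]]  # (4-bit codes, one scale per group)

GROUP_SIZE = 4  # hypothetical group size chosen for illustration


def quantize_int4(row: List[float], group_size: int = GROUP_SIZE) -> QuantRow:
    """Symmetric per-group INT4 quantization of one weight row."""
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(row), group_size):
        group = row[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Map the largest magnitude in the group to 7 so codes fit in [-8, 7].
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(q: QuantRow, group_size: int = GROUP_SIZE) -> List[float]:
    """Approximate the original weights as code * group scale."""
    codes, scales = q
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


def dq_gemm(x: Matrix, w_rows: List[QuantRow], group_size: int = GROUP_SIZE) -> Matrix:
    """Compute x @ W^T with floating-point activations after dequantizing
    the INT4 weights (a real DQ-GEMM kernel fuses these two steps)."""
    w = [dequantize_int4(q, group_size) for q in w_rows]
    return [[sum(a * b for a, b in zip(x_row, w_row)) for w_row in w] for x_row in x]


# Example: two output features, eight input features.
weights = [[0.5, -1.2, 0.3, 0.9, -0.7, 0.1, 1.5, -0.4],
           [1.0, 0.2, -0.3, 0.0, 0.8, -1.1, 0.6, 0.25]]
x = [[1.0, 2.0, -1.0, 0.5, 0.0, 1.0, -0.5, 2.0]]
packed = [quantize_int4(r) for r in weights]
approx = dq_gemm(x, packed)
exact = [[sum(a * b for a, b in zip(x[0], r)) for r in weights]]
print(approx, exact)
```

The quantized product tracks the full-precision one to within the rounding error of each group's scale, while the stored weights shrink to roughly a quarter of their FP16 size, plus the per-group scales.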
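RMSNorm, the normalization layer used in models such as Llama and Mistral, divides a hidden vector by its root mean square and then applies a learned per-channel gain; unlike LayerNorm, it neither subtracts the mean nor adds a bias. A minimal sketch, assuming `eps = 1e-6` (each model sets its own value) and a plain Python list standing in for a hidden-state tensor:

```python
import math
from typing import List


def rms_norm(x: List[float], gain: List[float], eps: float = 1e-6) -> List[float]:
    """RMSNorm: y_i = g_i * x_i / sqrt(mean(x^2) + eps). No mean subtraction, no bias."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [g * v * inv_rms for g, v in zip(gain, x)]


hidden = [3.0, -4.0, 0.0, 0.0]
y = rms_norm(hidden, gain=[1.0] * 4)
print(y)  # approximately [1.2, -1.6, 0.0, 0.0]: the RMS of the input is 2.5
```

Because only one reduction (the mean of squares) is needed, RMSNorm is slightly cheaper than LayerNorm, which also has to compute the mean.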
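The attention features in the list are easier to picture with a small sketch. In grouped-query attention, several query heads share one key/value head (multi-query attention is the extreme case of a single shared KV head), which shrinks the KV cache; sliding window attention, used by Mistral, lets each token attend only to the most recent `window` positions. The helper names and toy sizes below are illustrative assumptions, not part of the driver's or DirectML's API.

```python
from typing import List


def kv_head_for(query_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one KV head.
    Multi-query attention is the special case num_kv_heads == 1."""
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("query heads must divide evenly among KV heads")
    group = num_q_heads // num_kv_heads
    return query_head // group


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j:
    causal (j <= i) and within the last `window` positions (i - j < window)."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


# Toy sizes (hypothetical): 8 query heads sharing 2 KV heads, a 3-token window.
mapping = [kv_head_for(h, 8, 2) for h in range(8)]
mask = sliding_window_mask(seq_len=5, window=3)
print(mapping)  # [0, 0, 0, 0, 1, 1, 1, 1]
for row in mask:
    print("".join("x" if m else "." for m in row))
# x....
# xx...
# xxx..
# .xxx.
# ..xxx
```

With the window in place, each query touches at most `window` keys, so attention cost per token stays bounded no matter how long the sequence grows.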

In ONNX Runtime tests using the generative AI extensions released by Microsoft, the new driver improved performance across the board for both INT4 and FP16 data types. According to Nvidia, the optimizations in this update make large language models such as Phi-3, Llama 3, Gemma, and Mistral run up to three times faster.

Nvidia also highlighted the advantages of the RTX ecosystem, which is built on dedicated Tensor Cores. These cores power technologies such as DLSS Super Resolution, Nvidia ACE, RTX Remix, Omniverse, Broadcast, and RTX Video. Developers can also use SDKs such as TensorRT, Maxine, and RTX Video to accelerate AI workloads on Tensor Cores.

In a press release, Nvidia stated that its GPUs deliver up to 1,300 TOPS (trillions of operations per second) of AI performance, which it says far surpasses competing solutions on the market.