In a breakthrough of AI technology, OpenAI has introduced its next-generation AI model GPT-4o this week. The letter ‘o’ in the name stands for ‘Omni’, implying that the model inherently supports multiple input formats—transcending the need to convert all non-text formats to text. Co-founder and President of OpenAI, Greg Brockman, first published an image produced by GPT-4o.

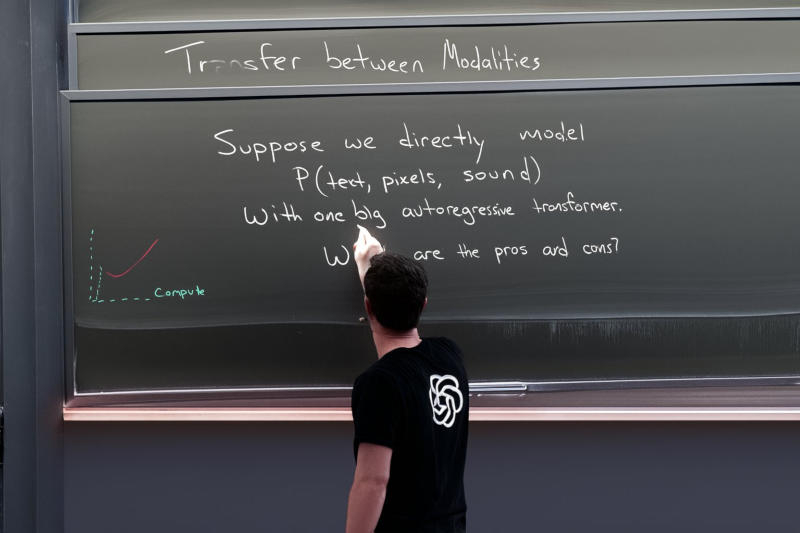

The AI model’s capability to support text, images, and sound as inputs implies its capacity to generate them as well. The above image, a creation of GPT-4o, is not a photograph. It shows a man wearing an OpenAI t-shirt and writing on a board. The partially erased text at the top says ‘Cross-modal Transfer’, followed by a text – ‘Say we’re directly modelling P (text, pixels, sound) with a single autoregressive transformer. What are the pros and cons?’

A closer look reveals some signs indicating the image was created by the AI. The board hangs at an unnatural angle, strangely another board appears beneath it, the man’s arm has an odd shape, and the lighting is uneven. However, what seems unbelievable is the AI’s ability to generate long coherent text segments—something DALL-E 3 finds challenging. GPT-4o’s image generator isn’t yet publicly available. For now, users of the ChatGPT with an integrated next-generation model can only generate images with DALL-E 3. Nevertheless, OpenAI’s President Greg Brockman ensures that substantial efforts are being undertaken to entirely unlock access to the new-generation model.