To reduce its reliance on external suppliers such as NVIDIA and AMD, which currently account for more than 70% of the AI accelerator market, Meta will begin deploying its own second-generation AI chips in its data centers this year, Reuters reports. This shift toward vertically integrated AI systems built on in-house hardware is becoming increasingly common among tech companies.

Meta’s second-generation AI chip, announced last year, could help the company better manage its growing AI-related expenses. With the increasing use of generative AI in products across Facebook, Instagram, WhatsApp, and hardware devices such as Ray-Ban smart glasses, Meta has been spending billions of dollars on specialized chips and data center upgrades.

At Meta’s scale, a successful rollout of its own AI chip could yield significant savings, says Dylan Patel, founder of the chip market research group SemiAnalysis. Patel estimates that the company could save hundreds of millions of dollars a year on electricity alone, plus billions of dollars in chip purchases. He notes that the infrastructure and energy needed for AI systems have become a major investment area for tech companies.

A Meta representative confirmed plans to put the updated AI chip into production in 2024, saying it will work alongside hundreds of thousands of existing and new GPUs. “We believe our accelerators complement commercially available GPUs, providing an optimal balance of performance and efficiency in Meta-specific workloads,” the representative said.

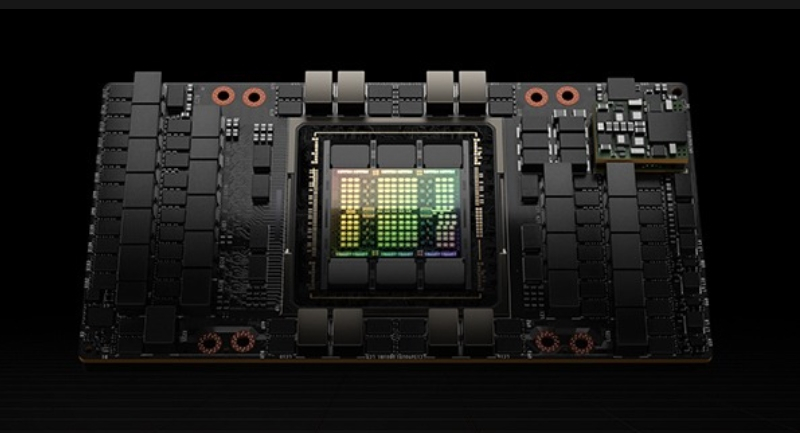

Last month, Meta’s CEO Mark Zuckerberg announced plans to acquire about 350,000 flagship NVIDIA H100 accelerators by the end of 2024. In combination with other systems, this will yield computational power equivalent to 600,000 H100 accelerators.

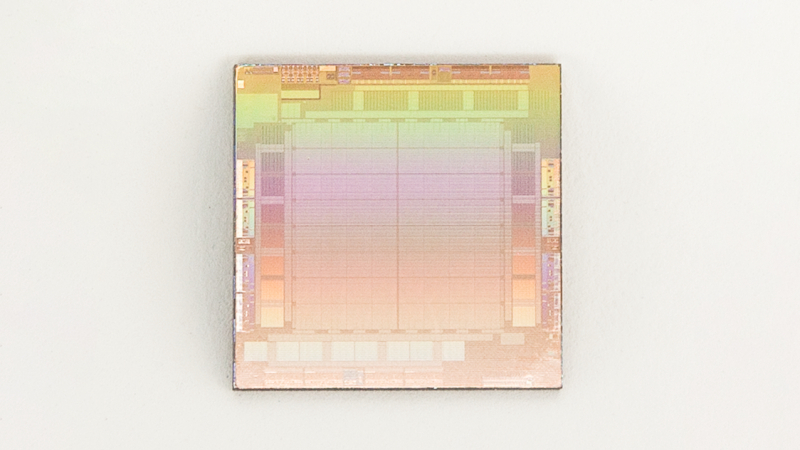

Meta has developed AI chips before, but in 2022 the company abandoned its first-generation chip and instead bought billions of dollars’ worth of NVIDIA GPUs. The new chip, codenamed Artemis, will be used only for inference, that is, for running already-trained neural networks, not for training them. For Meta’s workloads, these in-house chips might prove more energy-efficient than the more power-hungry NVIDIA chips. Third-party chips will continue to be used for AI training, although there has been speculation that Meta is also developing a more ambitious chip that could handle both training and inference.

Other major tech companies, including Amazon, Google, and Microsoft, are also developing their own AI chips. Google and Amazon have long built chips for their data centers. Last year, Google launched its fastest AI accelerator, the Cloud TPU v5p, and Amazon unveiled its Trainium2 accelerators for training large AI models. Microsoft, too, has developed the Maia 100 AI accelerator and the Arm-based Cobalt 100 processor for its data centers.

According to estimates by Pierre Ferragu of New Street Research, NVIDIA sold 2.5 million chips last year at around $15,000 each. Google, by contrast, reportedly spent around $2-3 billion to build approximately one million of its own AI chips, and Amazon reportedly spent $200 million over the past year on 100,000 of its own chips.
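
The per-chip economics implied by these estimates can be worked out with simple arithmetic. The sketch below uses only the figures quoted above; the variable and function names are illustrative, and the comparison is rough, since the NVIDIA figure is a sale price while the Google and Amazon figures are reported spending on their own chips.

```python
# Figures as quoted in the article (New Street Research estimates).
# All names below are illustrative, not taken from any source.
NVIDIA_UNITS = 2_500_000
NVIDIA_PRICE_USD = 15_000                          # approximate price per chip

GOOGLE_SPEND_USD = (2_000_000_000, 3_000_000_000)  # low and high end of the range
GOOGLE_UNITS = 1_000_000

AMAZON_SPEND_USD = 200_000_000
AMAZON_UNITS = 100_000


def cost_per_chip(total_spend_usd: int, units: int) -> float:
    """Average spend per chip: total spend divided by chips produced."""
    return total_spend_usd / units


# Implied NVIDIA chip revenue: units sold times approximate unit price.
nvidia_revenue = NVIDIA_UNITS * NVIDIA_PRICE_USD

# Implied per-chip spend for the in-house programs.
google_low, google_high = (cost_per_chip(s, GOOGLE_UNITS) for s in GOOGLE_SPEND_USD)
amazon_cost = cost_per_chip(AMAZON_SPEND_USD, AMAZON_UNITS)

print(f"NVIDIA implied revenue: ${nvidia_revenue / 1e9:.1f} billion")
print(f"Google implied spend per chip: ${google_low:,.0f} to ${google_high:,.0f}")
print(f"Amazon implied spend per chip: ${amazon_cost:,.0f}")
```

On these numbers, the in-house chips come out at roughly $2,000 to $3,000 apiece against about $15,000 for an NVIDIA chip, which helps explain the appeal of custom silicon, with the caveat that the chips differ in capability and the spending figures may not cover the same costs.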

Recent reports indicate that OpenAI, the developer of ChatGPT, is also interested in creating its own AI chip. More companies appear to be exploring ways to reduce their dependence on NVIDIA, despite the company’s leading position in the market. With orders booked a year in advance and prices high, demand for NVIDIA’s accelerators is outstripping supply.