New Generation of Google’s Language Model Unveiled

Less than two months after the launch of the cutting-edge Gemini neural network, Google has announced its successor. The advanced language model Gemini 1.5 was introduced today. It is available now to developers and enterprise users, with a rollout to everyday consumers planned soon. Google is eager to position Gemini as a business tool and a personal assistant, among other applications.

Improved Performance and Efficiency

Gemini 1.5 boasts several improvements. The mainstream Gemini 1.5 Pro model outperforms Gemini 1.0 Pro on 87% of the benchmarks Google uses in testing, placing it on par with the top-tier Gemini 1.0 Ultra. The new model employs the popular “Mixture of Experts” (MoE) approach, which activates only a portion of the model for each request rather than the whole network. This makes responses faster for the user and computation more efficient for Google.
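To make the “only a portion of the model” idea concrete, here is a minimal sketch of generic top-k expert routing in Python. Google has not published the routing details of Gemini 1.5, so this is not its implementation: the names `moe_forward`, `make_expert`, and `gate_weights`, the toy experts, and the choice of two active experts are all assumptions made for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical expert: a trivial function standing in for a sub-network."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k highest-scoring experts and mix their outputs."""
    # Router score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Only the top_k experts are run; the others are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_output = experts[index](x)
        output = [o + weight * v for o, v in zip(output, expert_output)]
    return output, chosen


random.seed(0)
NUM_EXPERTS, DIM = 8, 4  # assumed toy sizes
experts = [make_expert(float(i + 1)) for i in range(NUM_EXPERTS)]
gate_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

output, chosen = moe_forward([1.0, 0.5, -0.5, 2.0], gate_weights, experts)
print(f"ran {len(chosen)} of {NUM_EXPERTS} experts: {chosen}")
```

In this toy setup only two of the eight experts do any work per input, which is where the speed and efficiency gains described above would come from in a real model.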

Revolutionary Context Window

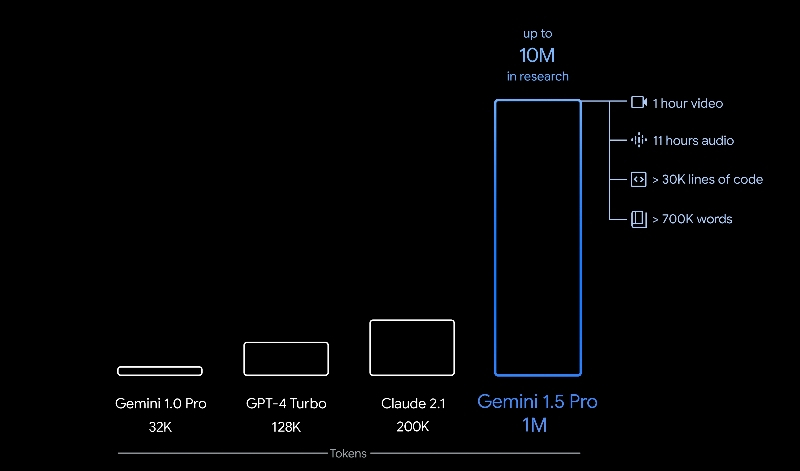

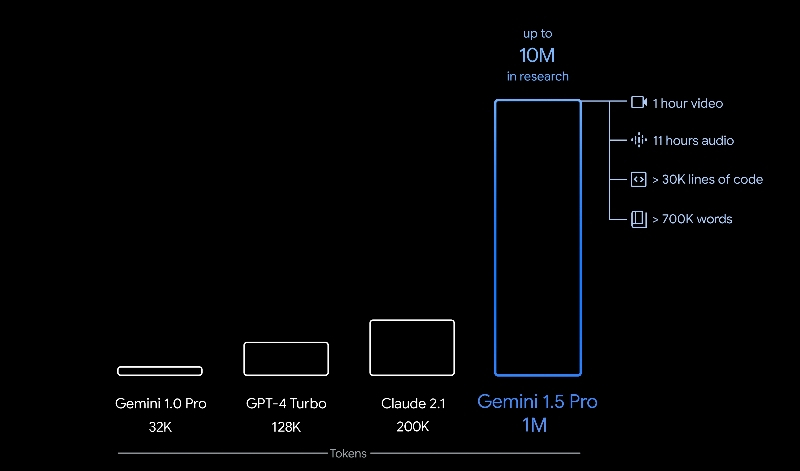

Google CEO Sundar Pichai is particularly proud of Gemini 1.5’s expanded context window, which lets the model handle much larger queries and review far more information at once. The window holds one million tokens, roughly eight times the 128,000 tokens of OpenAI’s GPT-4 and about 31 times the current Gemini Pro’s 32,000. In terms of size, Pichai likens this to around 10 to 11 hours of video, or tens of thousands of lines of code. Google researchers are also testing a ten-million-token context window, enough to fit the entire ‘Game of Thrones’ series into a single query.

Pichai illustrates the scope of this context window by noting that the entire ‘Lord of the Rings’ trilogy could fit into it. While this may seem niche, such capacity could in theory help Gemini uncover plot continuity errors, untangle Middle-earth genealogies, or make sense of anomalies like Tom Bombadil.

Notable Impact on Business

Pichai believes the larger context window could greatly benefit businesses. He notes, “This will let you provide examples where you can add a lot of personal context and information at the time of the query.” He adds, “Consider this as us significantly broadening the query window.” He suggests that filmmakers could upload an entire movie and ask Gemini how critics might respond, and that companies could use Gemini to process lengthy financial documents. “I consider this one of the biggest breakthroughs we’ve made,” Pichai says.

For now, Gemini 1.5 is available only to business users and developers, through Google’s Vertex AI and AI Studio. In time, Gemini 1.5 will replace Gemini 1.0. The standard Gemini Pro version will be replaced by the 1.5 Pro version, with a 128,000-token context window. The one-million-token version will come at a premium. Google is also testing the model’s safety and ethical boundaries, particularly with the new expanded context window.

The Ongoing AI Race

As Google continues to refine its AI models, companies worldwide are scrambling to define their AI strategies, including potential partnerships with Google, OpenAI, or other providers. Just recently, OpenAI introduced a “memory” feature for ChatGPT and appears to be preparing to enter the web search market. While Gemini’s advanced capabilities are particularly attractive to those already within Google’s ecosystem, there’s plenty of work still to be done.

The Future of AI and User Experience

Pichai predicts that, eventually, the distinctions between Gemini versions such as Pro and Ultra, along with the ongoing corporate battles, will not matter to users. He explains, “People will just consume the best user experience.” He compares it to how people use smartphones without thinking about the underlying processor. For now, though, people still care which chip powers their phone, because it matters. “The fundamental technologies are changing so fast,” says the Google CEO. “People still care.”