Infineon Unveils High-Powered AI-Ready Power Supplies

German semiconductor manufacturer Infineon has unveiled a line of high-power power supply units (PSUs) for current and future servers. The line is aimed at the energy demands of data centers. Data centers used to train artificial intelligence (AI) algorithms consume vast amounts of power, and large tech companies are working to prevent outages and ration the power supplied to these facilities. Infineon has now proposed its own solution to this problem.

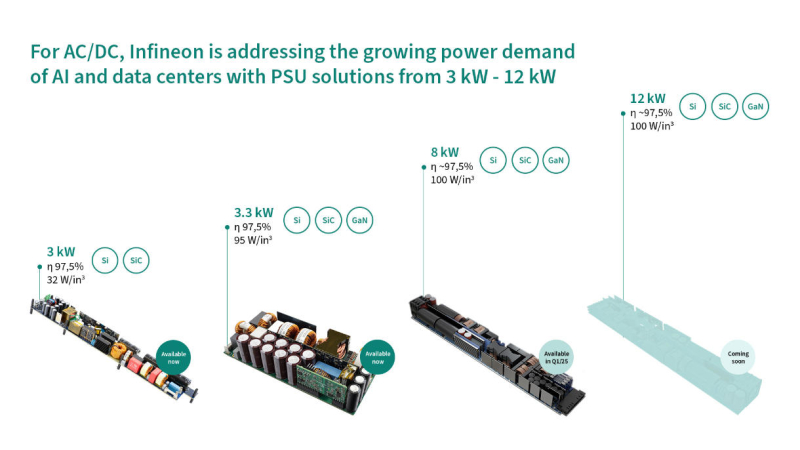

The company has announced next-generation PSUs for data centers that run cloud servers and AI workloads. These units combine components made from three semiconductor materials in a single module: silicon (Si), silicon carbide (SiC), and gallium nitride (GaN). This combination significantly improves power handling, efficiency, and reliability. The first models, rated at 8 kW, will be available for purchase in the first quarter of 2025 and are suitable for AI racks with a power capacity of 300 kW or more. The 12 kW units will reach an efficiency of up to 97.5%, but release dates for these more powerful models have not yet been disclosed.

In recent years, gallium nitride has been instrumental in shrinking consumer chargers, while silicon carbide delivers high efficiency at elevated voltages. SiC is used in DC converters in electric cars and can also work in systems that deliver high power to AI accelerators. Data center demand for energy has grown considerably with the widespread adoption of next-generation chatbots and other AI-based services. Infineon emphasizes that the high efficiency of its new power supplies will help reduce energy consumption in data centers. That, in turn, will lower greenhouse gas emissions and operating costs.

By 2030, data centers are expected to account for 7% of global energy consumption, according to industry forecasts. Modern AI accelerators consume up to 1 kW per chip, a figure expected to double by the end of the decade. Major tech companies working on AI have already felt the impact of this growing demand for electricity. Amazon, for instance, has had to ration power to prevent outages at its data centers in Dublin.