Nvidia showcased its next-generation artificial intelligence (AI) accelerators, based on Blackwell GPUs, at the GTC 2024 conference. According to the manufacturer, the upcoming accelerators will be up to 25 times more energy-efficient and cost-effective than Hopper, enabling the creation of even larger neural networks, including large language models (LLMs) with trillions of parameters.

About Blackwell GPU Architecture

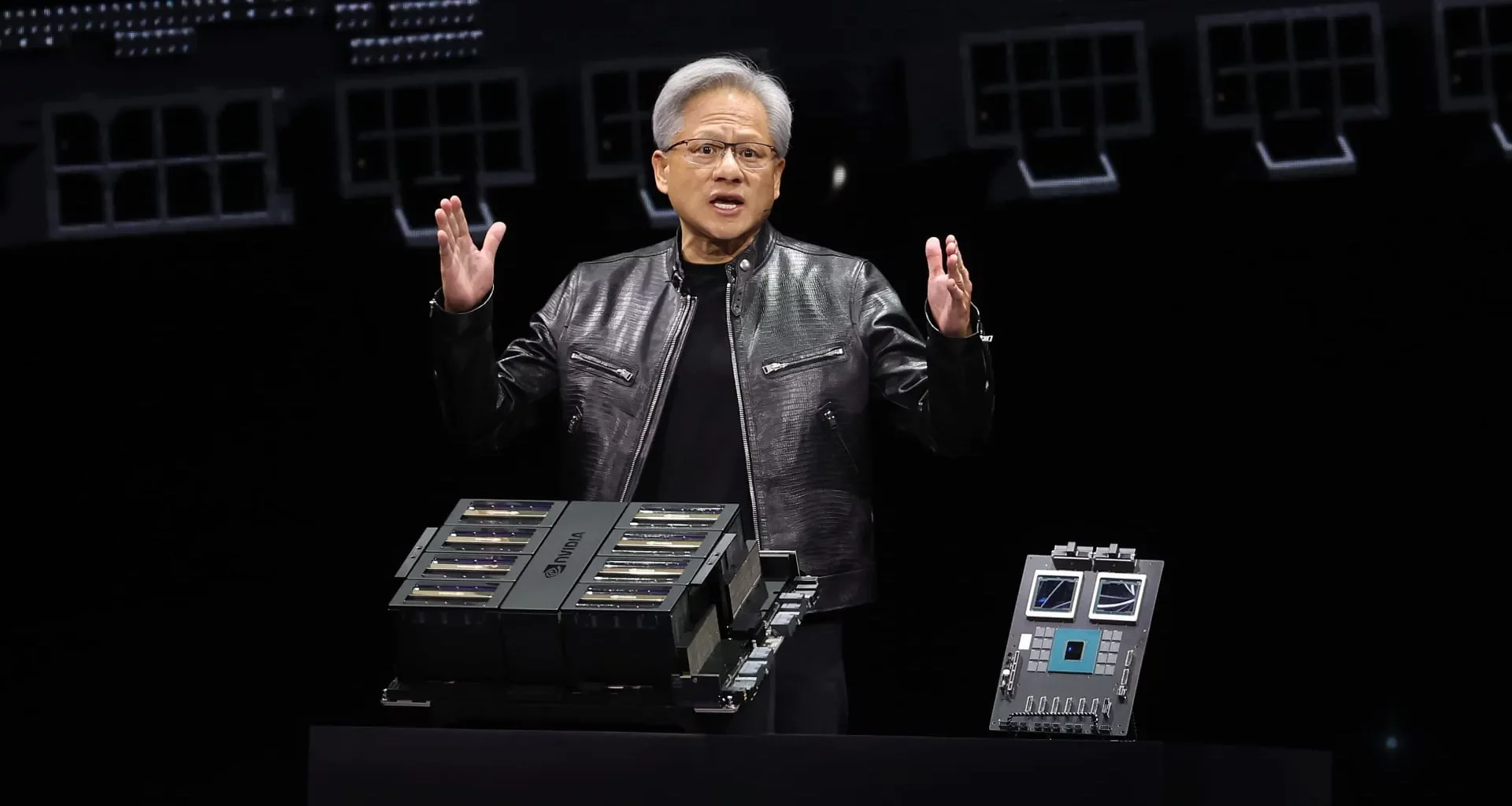

The Blackwell GPU architecture is named after the American mathematician David Harold Blackwell. It brings together a host of new technologies intended to spur advances in data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing, and generative AI. Emphasizing the importance of the latter, Nvidia CEO Jensen Huang stated, “Generative AI is the defining technology of our time. Blackwell GPUs are the engine for the new industrial revolution.”

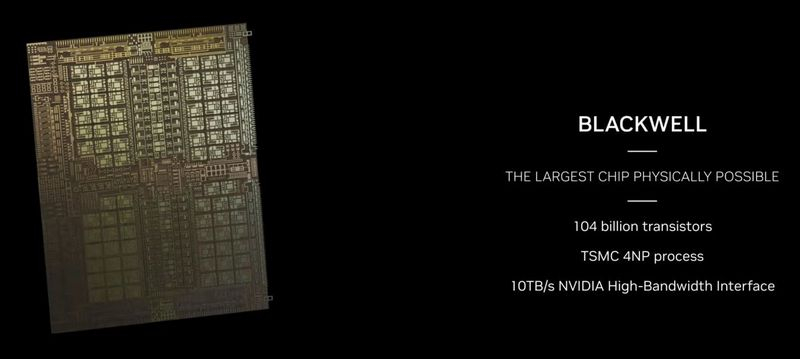

Nvidia’s most potent chip – B200 GPU

Nvidia boldly refers to the B200 GPU as the world’s most potent chip. The new GPU delivers up to 20 PFLOPS in FP4 and up to 10 PFLOPS in FP8 computations. It is Nvidia’s first GPU with a chiplet layout, manufactured on TSMC’s custom 4-nm-class process, 4NP, and assembled using 2.5D CoWoS-L packaging. The B200 GPU comprises 208 billion transistors.

It incorporates eight HBM3e memory stacks totaling 192 GB, with memory bandwidth of up to 8 TB/s. Blackwell accelerators within one system are interconnected using the fifth-generation NVLink interface, which provides a bandwidth of up to 1.8 TB/s. The new GPU also supports the NVSwitch 7.2T interface, which can connect up to 576 GPUs.
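
As a rough sanity check on the memory figures above, the following sketch works out the per-stack share of capacity and bandwidth, and how long one full pass over the memory would take at peak bandwidth. The even split across stacks, the decimal units, and the helper names `per_stack` and `full_sweep_ms` are assumptions made for illustration, not Nvidia specifications.

```python
# Back-of-the-envelope arithmetic on the B200 memory figures quoted above.
# Assumptions (not from Nvidia): capacity and bandwidth are split evenly
# across the stacks, and decimal units are used (1 TB = 1000 GB).

HBM_STACKS = 8
TOTAL_CAPACITY_GB = 192
TOTAL_BANDWIDTH_TB_PER_S = 8.0


def per_stack(total: float, stacks: int = HBM_STACKS) -> float:
    """Return the even per-stack share of a total figure."""
    return total / stacks


def full_sweep_ms(capacity_gb: float, bandwidth_tb_per_s: float) -> float:
    """Return the milliseconds needed to read the whole memory once at peak bandwidth."""
    bandwidth_gb_per_s = bandwidth_tb_per_s * 1000
    return capacity_gb * 1000 / bandwidth_gb_per_s


if __name__ == "__main__":
    print(f"Capacity per stack:  {per_stack(TOTAL_CAPACITY_GB):.0f} GB")
    print(f"Bandwidth per stack: {per_stack(TOTAL_BANDWIDTH_TB_PER_S):.0f} TB/s")
    sweep = full_sweep_ms(TOTAL_CAPACITY_GB, TOTAL_BANDWIDTH_TB_PER_S)
    print(f"One full memory pass: {sweep:.0f} ms")
```

Under these assumptions each stack holds 24 GB and contributes about 1 TB/s, and reading all 192 GB once takes roughly 24 ms. Real workloads rarely sustain peak bandwidth, so this is a lower bound rather than a measurement.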

Key Enhancements in B200

New tensor cores and the second generation of the Transformer Engine contribute to the B200’s higher performance. The Transformer Engine adjusts the computation precision to suit each task, which affects the speed of training and running neural networks as well as the maximum size of supported LLMs. Nvidia now supports AI training in FP8 format, while FP4 suffices for running trained neural networks. Blackwell supports a range of formats, including FP4, FP6, FP8, INT8, BF16, FP16, TF32, and FP64, with sparse computation support for all of them except FP64.
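
To illustrate why lower precision raises the maximum size of model a GPU can hold, here is a minimal sketch that estimates the memory taken by model weights alone in several of the formats listed above. It assumes weights are the only consumer of memory (no activations, KV cache, or optimizer state) and uses the 192 GB per-GPU capacity quoted earlier; the function names are hypothetical.

```python
import math

# Storage width of each format in bits per parameter.
BITS_PER_PARAM = {"FP4": 4, "FP6": 6, "FP8": 8, "FP16": 16}

# Per-GPU memory capacity quoted in the article for the B200.
B200_MEMORY_GB = 192


def weight_footprint_gb(num_params: int, fmt: str) -> float:
    """Return the size of the weights in GB (decimal) for a given format."""
    return num_params * BITS_PER_PARAM[fmt] / 8 / 1e9


def min_gpus_for_weights(num_params: int, fmt: str,
                         gpu_memory_gb: float = B200_MEMORY_GB) -> int:
    """Return the smallest number of GPUs whose combined memory holds the weights.

    Assumption: weights only; real deployments need extra headroom.
    """
    return math.ceil(weight_footprint_gb(num_params, fmt) / gpu_memory_gb)


if __name__ == "__main__":
    params = 10**12  # a one-trillion-parameter model
    for fmt in BITS_PER_PARAM:
        size = weight_footprint_gb(params, fmt)
        gpus = min_gpus_for_weights(params, fmt)
        print(f"{fmt:>4}: {size:7.0f} GB of weights, at least {gpus} GPUs")
```

For a one-trillion-parameter model, this estimate gives 500 GB of weights in FP4 versus 2,000 GB in FP16, which is the difference between at least 3 and at least 11 GPUs under the stated assumptions.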

Nvidia Grace Blackwell Superchip – the Flagship architecture

Comprising a pair of B200 GPUs and an Nvidia Grace Arm CPU with 72 Neoverse V2 cores, the Nvidia Grace Blackwell Superchip (GB200) is the flagship of the new architecture. The superchip, which occupies half the width of a server rack, has a TDP of up to 2.7 kW. With two B200 GPUs on board, its FP4 performance reaches 40 PFLOPS, and it can provide 20 PFLOPS in FP8/FP6/INT8 operations. Nvidia claims that the GB200 delivers a 30-fold increase in performance over the Nvidia H100 for large language model workloads and is also up to 25 times more energy-efficient and cost-effective.

GB200 NVL72 System and DGX SuperPOD

The GB200 NVL72 system, essentially a server rack, comprises 36 Grace Blackwell Superchips that together deliver 1.4 exaFLOPS in FP4 and 720 PFLOPS in FP8. It serves as the building block for Nvidia’s latest supercomputer, the DGX SuperPOD.
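
The rack-level figures follow from the per-GPU numbers quoted earlier for the B200. The sketch below multiplies them out, assuming peak throughput scales linearly with GPU count; the constant and function names are chosen for illustration only.

```python
# Peak per-GPU throughput for the B200, as quoted in the article (PFLOPS).
B200_PFLOPS = {"FP4": 20, "FP8": 10}

GPUS_PER_SUPERCHIP = 2    # each Grace Blackwell Superchip pairs two B200 GPUs
SUPERCHIPS_PER_RACK = 36  # one GB200 NVL72 rack


def rack_pflops(fmt: str) -> int:
    """Return the aggregate peak PFLOPS of one rack, assuming linear scaling."""
    return B200_PFLOPS[fmt] * GPUS_PER_SUPERCHIP * SUPERCHIPS_PER_RACK


if __name__ == "__main__":
    gpus = GPUS_PER_SUPERCHIP * SUPERCHIPS_PER_RACK
    print(f"GPUs per rack: {gpus}")
    for fmt in B200_PFLOPS:
        total = rack_pflops(fmt)
        print(f"{fmt}: {total} PFLOPS ({total / 1000:.2f} exaFLOPS)")
```

The 72 GPUs give 1,440 PFLOPS in FP4, which the article rounds to 1.4 exaFLOPS, and 720 PFLOPS in FP8.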

Latest server systems by Nvidia

Nvidia also unveiled its HGX B100, HGX B200, and DGX B200 server systems, each featuring eight Blackwell accelerators interconnected via NVLink 5. The accelerators in the HGX B100 and HGX B200 have a TDP of 700 W and 1,000 W, respectively. The HGX B100 delivers up to 112 PFLOPS in FP4 and 56 PFLOPS in FP8/FP6/INT8 operations, while the HGX B200 delivers up to 144 PFLOPS in FP4 and 72 PFLOPS in FP8/FP6/INT8. Lastly, the DGX B200 offers performance similar to the HGX B200 and also contains two Intel Xeon Emerald Rapids CPUs.
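
A short sketch can break the board-level figures down per accelerator and relate them to power. It assumes the 700 W and 1,000 W TDP values apply to each accelerator rather than to the whole board, and that throughput divides evenly across the eight accelerators; the dictionary layout and function names are illustrative.

```python
ACCELERATORS_PER_BOARD = 8

# Board-level peak figures quoted in the article.
# Assumption: tdp_w is the TDP of a single accelerator, not the whole board.
HGX_BOARDS = {
    "HGX B100": {"fp4_pflops": 112, "fp8_pflops": 56, "tdp_w": 700},
    "HGX B200": {"fp4_pflops": 144, "fp8_pflops": 72, "tdp_w": 1000},
}


def per_accelerator_pflops(board_pflops: float) -> float:
    """Return the even per-accelerator share of a board-level PFLOPS figure."""
    return board_pflops / ACCELERATORS_PER_BOARD


def tflops_per_watt(board_pflops: float, tdp_w: float) -> float:
    """Return peak TFLOPS per watt for one accelerator (1 PFLOPS = 1000 TFLOPS)."""
    return per_accelerator_pflops(board_pflops) * 1000 / tdp_w


if __name__ == "__main__":
    for name, spec in HGX_BOARDS.items():
        fp4 = per_accelerator_pflops(spec["fp4_pflops"])
        fp8 = per_accelerator_pflops(spec["fp8_pflops"])
        eff = tflops_per_watt(spec["fp4_pflops"], spec["tdp_w"])
        print(f"{name}: {fp4:.0f} PFLOPS FP4, {fp8:.0f} PFLOPS FP8 per accelerator, "
              f"{eff:.0f} FP4 TFLOPS/W")
```

Under these assumptions, each HGX B100 accelerator peaks at 14 PFLOPS in FP4 and each HGX B200 accelerator at 18 PFLOPS, so the higher-power part is faster in absolute terms but slightly lower in peak throughput per watt.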

Nvidia’s Large-scale AI System

Nvidia plans to connect 10,000 to 100,000 GB200 accelerators in a single data center using its Nvidia Quantum-X800 InfiniBand and Spectrum-X800 Ethernet networking platforms, which support speeds of up to 800 Gbps.

Many manufacturers, including Aivres, ASRock Rack, ASUS, Eviden, Foxconn, GIGABYTE, Inventec, Pegatron, QCT, Wistron, Wiwynn, and ZT Systems, will soon introduce systems based on the Nvidia B200. Moreover, Nvidia’s GB200 will soon be available via Nvidia DGX Cloud, and later this year it will also be accessible from several major cloud providers, including AWS, Google Cloud, and Oracle Cloud.