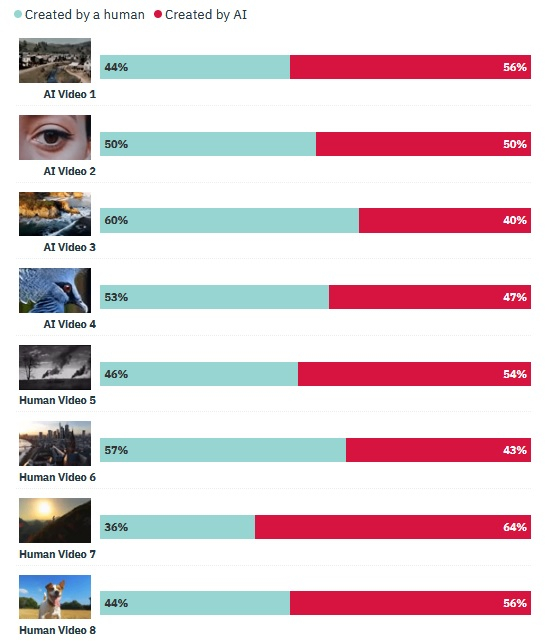

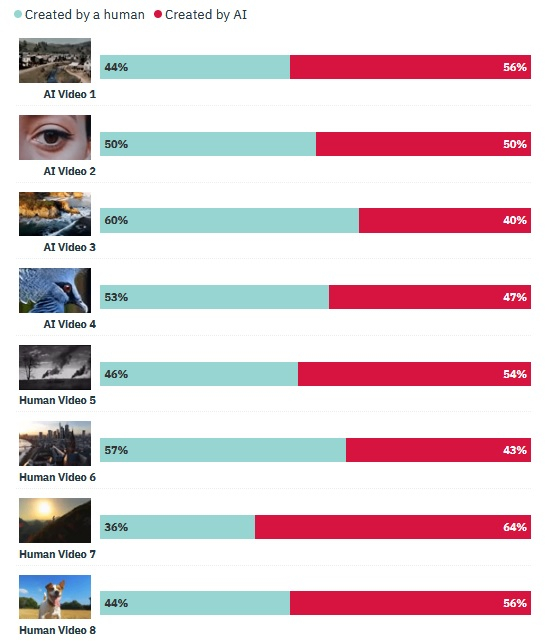

A few weeks ago, OpenAI unveiled Sora, a neural network that generates realistic video clips from a text description, up to one minute long and at a resolution of 1920 × 1080 pixels. HarrisX, a research company, recently ran a survey asking adult Americans to tell AI-generated videos apart from real ones. On 5 of the 8 clips in the survey, most respondents guessed wrong.

The poll was conducted in the US from March 1 to March 4 and surveyed more than 1,000 American adults. The researchers generated four high-quality videos with Sora and selected four short clips filmed with a conventional camera. Respondents watched the videos in random order and had to decide whether each clip was real footage or an AI creation. Opinions were split, but on five of the eight clips the majority gave the wrong answer.

The study suggests that content produced by generative neural networks is becoming more realistic and harder to distinguish from genuine footage. As a result, calls for legislation to regulate the field are growing louder worldwide. One proposal would require users of neural networks to label generated content so that it does not mislead viewers or become a source of disinformation.

Although Sora is not yet publicly available, it is already raising serious concerns, particularly in the entertainment industry, where video generation technology could have several negative consequences for film studios. There is also a growing debate over the potential misuse of Sora-like algorithms to create fake videos of politicians and celebrities, which could have unpredictable repercussions.