Following NVIDIA’s recent announcement of the “Chat with RTX” application for running local chatbots on computers with RTX graphics cards, AMD has now described a way to run AI chatbots locally on its processors and graphics cards using an open large language model (LLM). AMD suggests using the third-party application LM Studio for the task.

To run the AI chatbot, a system needs either a processor with a Ryzen AI accelerator, which is only available in certain Ryzen 7000 and 8000 series APUs (Phoenix and Hawk Point), or an AMD Radeon RX 7000 graphics card.

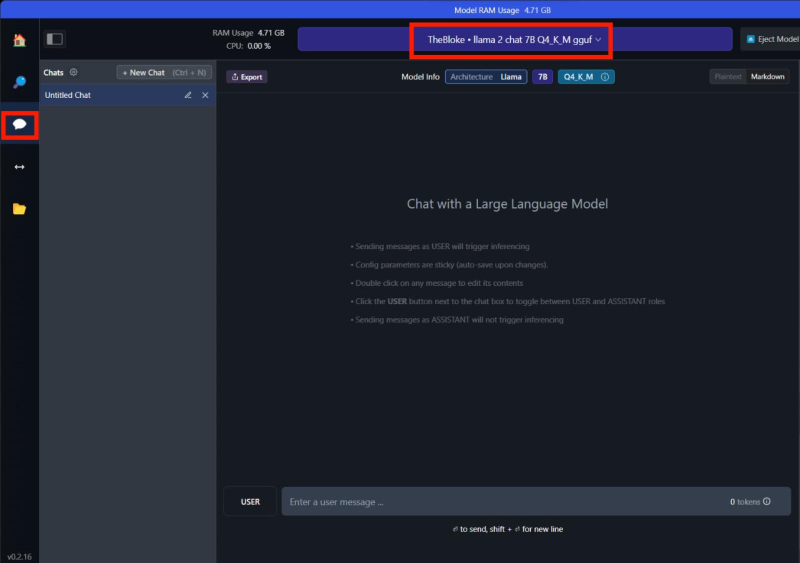

The first step is to install LM Studio for Windows; for AI acceleration on a graphics card, the version with ROCm support is required. Next, the user should enter TheBloke/OpenHermes-2.5-Mistral-7B-GGUF in the search bar to run a bot based on Mistral 7B, or TheBloke/Llama-2-7B-Chat-GGUF to use Llama 2 7B, depending on the model of interest. Following this, the user needs to find and download the Q4_K_M model file from the right panel. Finally, the user can head to the chat tab and start interacting with the AI.
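Beyond the chat tab, a downloaded model can also be queried from a script. The following is a minimal sketch, not part of AMD's instructions: it assumes LM Studio's optional local server has been started and is listening on `http://localhost:1234/v1` with an OpenAI-style chat endpoint. The model name `local-model` and the helper names `build_chat_request` and `ask` are hypothetical placeholders chosen for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at this address and
# accepts OpenAI-style chat completion requests. Adjust if yours differs.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat payload. Does not touch the network."""
    return {
        "model": model,  # hypothetical placeholder name for the loaded model
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def ask(prompt, base_url=BASE_URL, timeout=120):
    """Send the prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumed response shape: the first choice carries the assistant message.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, calling `ask("Summarize what a GGUF file is.")` should return the model's reply as a string. If the server is not running, the call raises a connection error.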

For AI acceleration on a Radeon RX 7000 series graphics card, a few more steps are required. Users should ensure that the GPU Offload value in the settings is set to the maximum and that the Detected GPU Type field displays AMD ROCm.

In contrast to NVIDIA, AMD has not yet developed its own application for interacting with a chatbot. However, that does not rule out such an application in the future.