AMD Pledges 100-Fold Increase in Server Chip Power Efficiency by 2026-27

AMD’s CEO, Lisa Su, recently suggested that the company could significantly improve the power efficiency of its server solutions. Having set a goal in 2021 to increase power efficiency 30-fold over 2020 levels by 2025, AMD now appears ready to raise that target to a 100-fold increase by 2026-2027.

Su made the announcement at the ITF World event in Belgium, hosted by Imec, a local leader in lithography research and development. At the event, Su received the prestigious Imec Innovation Award, joining an elite list of past recipients that includes Intel co-founder Gordon Moore, TSMC founder Morris Chang, and Microsoft co-founder Bill Gates. Su has been with AMD for several years, during which she worked her way up to her current position as CEO.

Su expressed confidence in AMD’s ability to surpass its 2025 power efficiency goal, which calls for a 30-fold increase in data centre power efficiency over 2020 levels. Beyond that, AMD expects to boost the power efficiency of its data centre components by more than 100 times within the following two years. The 30×25 goal, set by the company in 2021, followed its 25×20 programme launched in 2014, which aimed to improve mobile processor power efficiency 25-fold by 2020. The company exceeded that target, achieving a 31.7-fold increase in the power efficiency of its mobile processors.
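
To put these multiples in perspective, the short sketch below converts each target into the constant year-over-year improvement it would imply. It is only back-of-the-envelope arithmetic: the year spans are read from the dates above, and treating the 100-fold figure as measured from the same 2020 baseline through 2027 is an assumption, not something Su stated.

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Constant yearly multiplier that compounds to total_gain over the given years."""
    return total_gain ** (1.0 / years)


# (label, total efficiency gain, number of years); spans are assumptions
# derived from the dates quoted in the article.
targets = [
    ("25x20 goal (2014-2020)", 25.0, 6),
    ("25x20 achieved (2014-2020)", 31.7, 6),
    ("30x25 goal (2020-2025)", 30.0, 5),
    ("100x by 2027 (assumed 2020 baseline)", 100.0, 7),
]

for label, gain, years in targets:
    print(f"{label}: about {annual_factor(gain, years):.2f}x per year")
```

Under these assumptions, the 30×25 goal works out to efficiency roughly doubling every year, a steeper pace than the earlier mobile programme required.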

In 2023, AMD significantly reduced the power consumption of its chips, largely through advances in chip layout. The focus on this approach helped the company curb its carbon emissions last year, so that they matched the total emissions generated in 2022.

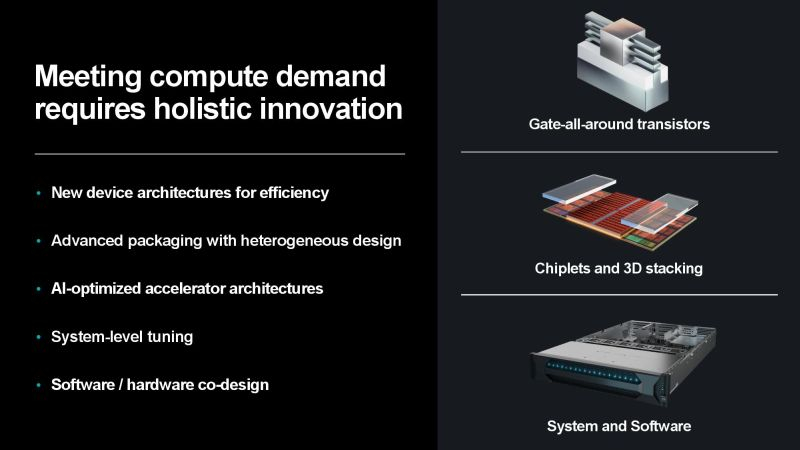

The problem lies in the growing demand for computing power driven by the rapid development of AI systems, which exceeds the semiconductor industry’s ability to raise the performance of computing solutions. Demand in this area may outpace supply by a factor of 20, especially considering the size of large language models. Alongside hardware improvements, software optimisation should also be a priority.

According to Su, decreasing computational precision can significantly reduce data centre power consumption. The aim is to find a balance between calculation accuracy and power consumption that minimally affects the performance of the resulting AI systems. Increasing the integration level of computing components will also lower energy consumption: the shorter the interface, the lower the energy cost of transferring information. One way to improve power efficiency is to integrate HBM-type memory close to the computational blocks of accelerators.
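
The precision trade-off can be illustrated with a minimal sketch using only Python's standard library. It stores a few made-up sample values in 32-bit and 16-bit floating-point formats and compares the storage size with the rounding error introduced. It does not measure power; it only shows that halving precision halves the data that must be stored and moved, at the cost of accuracy. The sample values and the `round_trip` helper are illustrative assumptions, not anything AMD has described.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a value in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


# Hypothetical sample values standing in for model weights.
weights = [0.1, -1.337, 3.14159, 42.0]

# "<f" is IEEE 754 single precision, "<e" is half precision.
for name, fmt in [("FP32", "<f"), ("FP16", "<e")]:
    size = struct.calcsize(fmt)
    worst = max(abs(w - round_trip(w, fmt)) / abs(w) for w in weights)
    print(f"{name}: {size} bytes per value, worst relative error {worst:.1e}")
```

Whether the larger rounding error is acceptable depends on the workload, which is the balance Su refers to.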

To achieve the best results, Su explained, companies from various industries should cooperate openly. She sees this as inevitable, since progress will be difficult without collaborative efforts in the current circumstances.

Su openly acknowledged the influence of advanced process technologies on chip power efficiency. AMD plans to manufacture products on a 3-nm process combined with a gate-all-around (GAA) transistor architecture. The development of more efficient interfaces and chip layout methods also requires due attention.